Abstract.

Sleep deprivation has a broad variety of effects on human performance and neural functioning that manifest themselves at different levels of description. On a macroscopic level, sleep deprivation mainly affects executive functions, especially in novel tasks. Macroscopic and mesoscopic effects of sleep deprivation on brain activity include reduced cortical responsiveness to incoming stimuli, reflecting reduced attention. On a microscopic level, sleep deprivation is associated with increased levels of adenosine, a neuromodulator that has a general inhibitory effect on neural activity. The inhibition of cholinergic nuclei appears particularly relevant, as the associated decrease in cortical acetylcholine seems to cause effects of sleep deprivation on macroscopic brain activity. In general, however, the relationships between the neural effects of sleep deprivation across observation scales are poorly understood and uncovering these relationships should be a primary target in future research.

In 2004 a reality television program called ‘Shattered’ was broadcast on Channel 4 in the United Kingdom. In the show, a number of contestants struggled to stay awake for as long as possible. They remained awake for days on end (the winner managed to stay awake for 178 h) but at a considerable cost. Lack of sleep resulted in a severe loss in concentration capacity and extreme mood swings. At some point, the contestants began to behave in an erratic fashion and they even started to hallucinate and to have delusional thoughts (known as hypnagogia). These phenomena disappeared after one or two good nights’ sleep. It goes without saying that such dramatic behavioral effects reflect changes in functioning of the sleep-deprived nervous system.

Sleep loss can have various origins, of which pathological causes are the first to mention. In this context, one discriminates between sleep disorders (dyssomnias) as such, like obstructive sleep apnoea, and other disorders that involve sleep loss, such as clinical depression, chronic pain, and restless-legs syndrome. Sleep loss can also be induced in test animals or human volunteers to investigate the neurobehavioral consequences of insufficient sleep. Moreover, as humans, we can choose to voluntarily adopt a pattern of waking and sleeping that opposes the body’s urge to rest, e.g., through extensive traveling across time zones (chronic jet lag) or working night shifts, which may lead to chronic sleep loss [1]. Chronic sleep loss, or sleep debt [2], is becoming increasingly common and affects millions of people, especially in more developed countries where sleep debt is probably due to the increase in working hours in certain professions, such as medicine [3].

In the literature on the neurobehavioral effects of sleep loss a distinction is made between sleep reduction, sleep fragmentation, and total sleep loss or sleep deprivation [4]. Sleep reduction refers to a situation in which an organism falls asleep later than usual or wakes up earlier than dictated by its physiological needs, while sleep fragmentation refers to the case in which an organism is permitted to sleep but wakes up and falls asleep again at certain intervals. In general, sleep fragmentation constitutes a clinical symptom, but it may also be induced experimentally in healthy subjects. Finally, sleep deprivation (SD) can be considered an extreme case of sleep reduction, in that the organism is simply awake for a prolonged period of time, as for example when one or more nights of sleep are skipped. The distinction between sleep reduction, sleep fragmentation, and SD serves mainly as a useful heuristic and is not assumed to imply qualitatively different neurobehavioral effects. For example, Bonnet and Arand [5] demonstrated that all these forms of sleep loss may lead to very similar effects on the experience of sleepiness, psychomotor performance, and hormonal disturbances.

In the present article, we will review part of the vast experimental literature on sleep loss involving human participants, in combination with a few selected animal studies. In particular, we will focus on the behavioral and neural effects of SD with the aim to elucidate the neurophysiological underpinnings of SD-induced deterioration in cognitive functioning and to identify directions for future research. To this end, we review the behavioral and neural effects of SD according to three levels of observation, called the macro-, meso- and micro-level [6–10]. With the term macro-level, we refer to macroscopic behaviors including cognition, emotional processes, muscle activity, kinematics, and motion, as well as collective activities of large, crudely defined brain structures, e.g., prefrontal cortex (PFC), thalamus, and hippocampus. The growing field of cognitive neuroscience can be considered an attempt to link macroscopic behavior with large-scale neural activity. Modern neuroimaging techniques like functional magnetic resonance imaging (fMRI) and electro- and/or magneto-encephalography (EEG and MEG) exploit the neurons’ overall metabolism or record synchronized firing behavior of neural assemblies and allow for zooming in on neural activity and ensembles at the meso-level. With this term, we refer to neural activity within large brain areas down to ensembles of a smaller number of cells. That is, ensembles at the meso-level still form more or less well-defined functional units, both in terms of structure, such as a hyper-column in the visual cortex [11], and activity, i.e., neural synchronization [see e.g., refs. 12, 13]. Finally, the micro-level refers to the molecular and cellular level, that is, the level of ion channels, gene products and protein synthesis. This is the level at which very fast chemical processes occur that constitute the physical or chemical building blocks of brain function. 
Even though the boundaries between macro-, meso- and micro-levels are not clear-cut, they provide a useful heuristic for discussing effects of SD and linking SD phenomena across levels. Although we will review effects of SD on all scales, the emphasis will be on neural activity at the macro-level and at the meso-level, that is, on behavior and interactions among comparatively large brain structures. Where possible, we will establish a link between the macro- and/or meso-level and the micro-level, focusing on effects of SD on cortical activity in relation to impairment in cognitive performance. We will first establish a link between the behavioral effects of SD and its effects on large-scale brain activity. Subsequently, we will show how effects of SD on behavior and brain activity (macro- and meso-levels) are matched by cellular and molecular changes (micro-level) as reported in animal studies.

SD affects behavior and coarse-grained brain activity — the macro-level

Applied and fundamental research has identified a broad variety of detrimental consequences of SD on performance. In more applied fields, there is a major interest in groups of workers who customarily do not receive enough sleep [14–19]. For example, a study involving doctors working in a hospital reported that, after a 32-h shift, the resulting lack of sleep had a profound negative effect on mood and cognitive performance [3]. However, several studies also reported beneficial effects of SD, e.g., one night of SD can have beneficial effects on mood in the majority of patients diagnosed with depression, possibly through influences on the circadian rhythm and the arousal system [20–22]. Unfortunately, the depressive symptoms usually re-appear after a recovery sleep [23, 24]. In addition, epidemiological studies have shown that mild sleep restriction decreases mortality in long sleepers, putatively because sleep restriction might resemble dietary restriction as a potential aid to survival [25]. Moreover, spending excessive time in bed can elicit daytime lethargy and exacerbate sleep fragmentation, resulting in a vicious cycle of further time in bed and further sleep fragmentation. However, these beneficial effects of mild sleep restriction reverse at sleep durations of less than 6.5 h per night [25].

Mood, memory, and cognition

Fundamental research has shown that SD profoundly affects human functioning and that its effects are so widespread that it is difficult to find functions that remain untouched [26–28]. The literature further suggests that consolidation of newly learned material (e.g., novel words or novel perceptual skills) is disrupted after loss of a night’s sleep [29, 30]. Apart from affecting performance, sleep loss invariably leads to a strong subjective feeling of sleepiness, negative mood, and stress [28]. In extreme cases, SD might even lead to hallucinations, and test animals that are sleep-deprived for an indefinite period of time will eventually die. It remains unclear, however, whether the detrimental effects of SD on performance are simply due to a lack of sleep as such or to the accompanying stress [cf. ref. 31]. Stress typically results in a rise in cortisol levels, which, for example, led McEwen [32] to suggest that chronic SD acts as a chronic stressor that yields allostatic load on the body, which, in turn, negatively affects the immune and the nervous system. A study by Ruskin and colleagues [33], however, produced opposing results, in that both adrenalectomized rats, i.e., rats with a blocked adrenal stress system, and intact rats suffered to an equal degree from SD.

Recent studies have shown impaired performance of executive functioning including measures of verbal fluency, creativity, planning skills [4, 34], novelty processing [35], and driving performance [36]. The impact of SD is likely to be particularly prominent in tasks that strongly depend on attention, i.e., tasks that require other than well-learned automatic responses will be most vulnerable [37]. Besides the vast literature on SD-related performance decrements in novel tasks, there are also several studies showing that SD leads to performance deficits in vigilance tasks [28, 38–40]. These behavioral effects point to the involvement of brain structures that have been associated with attention and arousal. In particular, several studies have hinted at a central role for the PFC in relation to SD [4, 41, 42]. The PFC is a neocortical region that is known to support a diverse and flexible repertoire of behavioral functions and is most elaborated in primates. It consists of a massive network, connecting perceptual, motor, and limbic regions within the brain, and is important whenever top-down processing is needed, i.e., during executive functions and attention [43, 44]. Impaired attention and cognitive performance due to SD therefore suggest decreased brain activity and function in the PFC [45]. Similarly, the disrupted memory consolidation after SD indicates involvement of the hippocampus, a crucial structure involved in learning and the consolidation of newly learned material [46, 47]. Indeed, Hairston and colleagues [48] recently found that sleep restriction in the rat impaired hippocampus-dependent learning.

Staying awake

Another way to study the neural effects of sleep is by examining agents that promote wakefulness. The subjective feelings of sleepiness and the objective decrements in psychomotor functioning can be temporarily reversed by administering psychostimulants, such as caffeine. Caffeine is the most widely consumed stimulant in the world [49] and has been shown to produce significant alerting and long-lasting beneficial mood effects in sleep-deprived individuals [50]. Likewise, the military is actively seeking ways to prolong wakefulness in soldiers without hampering their performance in sustained operations. As a case in point, Baranski and colleagues [51] studied effects of the psychostimulant modafinil on mood and performance in a group of sleep-deprived soldiers. Interestingly, a dose of about 300 mg modafinil resulted in a level of cognitive performance that was virtually identical to baseline. Wesensten and colleagues [52] directly compared effects of caffeine, modafinil, and amphetamine on a group of healthy volunteers who had remained awake for 85 h. It was found that all agents improved vigilance, and that caffeine and modafinil improved executive functioning. For a review describing the neuropharmacology of caffeine and other stimulants in more detail, see Boutrel and Koob [53]. In brief, caffeine seems to exert its effect on wakefulness via two complementary routes: on the one hand, it activates cholinergic neurons in the forebrain, while on the other, it inhibits sleep-promoting neurons in the hypothalamus. A behavioral method to promote wakefulness is to engage in challenging tasks that require a high degree of motivation [37]. Interestingly, even body posture affects the tendency to fall asleep: Caldwell and colleagues [54] found that sleep-deprived participants were better able to stay awake in a standing upright posture than when sitting, as demonstrated by psychomotor performance and EEG arousal.

SD affects more fine-grained brain activity — the meso-level

Over the years, a wide range of non-invasive methods has become available to study human brain activity [see e.g., ref. 55]. Broadly speaking, these methods fall into two categories. The first class of methods serves to accurately identify locations in the brain and is based on the premise that increased activity and recruitment of neural tissue is accompanied by an increased metabolism in terms of oxygen and/or glucose consumption. Well-known methods within this class are positron emission tomography (PET) and fMRI. While PET measures hemodynamic changes by marking blood with a radioactive tracer, fMRI measures the blood oxygenation level-dependent (BOLD) contrast [56–58]. As blood flow to specific brain regions increases with increased neural activity, both techniques provide indirect measures of this activity. To extract the brain activity related to a specific cognitive function, one typically determines the difference in brain activity under baseline and activation conditions [58–60].

The second class of methods serves to study the temporal dynamics of neural activity. Well-known methods within this class are EEG and MEG. With EEG, one measures voltage or potential differences between different parts of the brain using electrodes placed on the scalp. With MEG, in contrast, one measures magnetic fields that derive from the flow of ionic currents in the (cortical) neuron dendrites [61]. In analyzing encephalographic data, identification of event-related potentials (ERPs in the case of EEG) or event-related fields (ERFs in the case of MEG) is a common technique. By averaging traces over multiple trials that are aligned at a specific event (usually, the onset of an external stimulus), brain activity related to cortical processing can be extracted. That is, one identifies certain time- or phase-locked components and omits unwanted ‘noise’, i.e., background activity that is not phase-locked to the event in question [62–64], yielding a good signal-to-noise ratio. A range of ERP and ERF components have been identified that reflect specific forms of information processing, related to sensory, motor, and/or cognitive functions, as well as specific subject characteristics, like age and health status [see e.g., refs. 65, 66].
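The averaging procedure described above can be illustrated with a minimal sketch on simulated data. All values in it (number of trials, noise level, shape and position of the event-locked deflection) are hypothetical and chosen only for illustration; the function names are likewise not taken from any particular analysis package.

```python
import math
import random


def simulate_trial(n_samples, rng, noise_sd=5.0):
    """Return one simulated trial: a fixed event-locked deflection plus
    random background activity (all parameters are illustrative)."""
    trial = []
    for i in range(n_samples):
        # Event-locked component: a negative, Gaussian-shaped deflection
        # centred on sample 100 (a stand-in for a component such as N1).
        evoked = -2.0 * math.exp(-((i - 100) ** 2) / (2 * 10.0 ** 2))
        trial.append(evoked + rng.gauss(0.0, noise_sd))
    return trial


def average_trials(trials):
    """Average event-aligned trials sample by sample."""
    n_trials = len(trials)
    return [sum(samples) / n_trials for samples in zip(*trials)]


def rms(values):
    """Root-mean-square amplitude of a list of samples."""
    return math.sqrt(sum(v * v for v in values) / len(values))


rng = random.Random(0)  # fixed seed so the sketch is reproducible
trials = [simulate_trial(300, rng) for _ in range(400)]
erp = average_trials(trials)

# The first 50 samples contain essentially no evoked activity, so their
# RMS amplitude estimates the residual background 'noise'.
single_trial_noise = rms(trials[0][:50])
averaged_noise = rms(erp[:50])

# Sample index of the most negative point of the averaged waveform.
peak_index = min(range(len(erp)), key=lambda i: erp[i])

print(f"noise in one trial: {single_trial_noise:.2f}")
print(f"noise after averaging: {averaged_noise:.2f}")
print(f"most negative sample: {peak_index}")
```

Because the background activity in this sketch is independent across trials, its contribution to the average shrinks roughly with the square root of the number of trials, whereas the event-locked deflection is preserved. In real recordings, background activity is not purely random, so the improvement is typically less clean than in this simulation.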

Event-related components

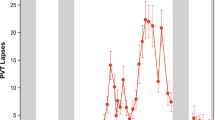

With respect to SD, two characteristic ERP/ERF components have received special attention in the literature, namely N1 and P300 (sometimes called P3). N1 represents a negative polarity deflection in the waveform about 100 ms after stimulus presentation. It is associated with the processing of auditory, visual, or tactile stimuli in primary sensory areas [cf. ref. 67]. However, N1 is not a purely sensory phenomenon but is affected by attention, e.g., the amplitude of the auditory N1 can be increased by simply asking participants to attend to one ear [68, 69] or by administering a small dose of caffeine [70, 71]. The importance of N1 as a marker for attentional processing is underscored by the clinical observation that patients with frontal lesions exhibit a reduction in N1 amplitude, and a concomitant disruption of attentional capacities [72]. Similarly, N1 amplitude reduction can also be induced by SD. For example, using an auditory oddball paradigm (in which participants had to respond to infrequently appearing target stimuli), Cote and colleagues [73] observed a marked N1 amplitude reduction in participants who had suffered a night of sleep fragmentation. N1 amplitude reduction after SD is not restricted to auditory responses [74–76], but is also observed for visual- [77] and motor-evoked potentials [76]. Figure 1 shows the reduction in the auditory- and motor-related field following SD. These experimental findings point to reduced responsiveness of sensory areas to peripheral input after SD due to reduced attention [76]. Similarly, the increased N1 amplitude after a small dose of caffeine has also been associated with attention [70, 71].

Reduction of the auditory- (upper panel) and motor-related (lower panel) response after SD. Left: spatial distribution of both responses showing event-related activity above either bilateral auditory areas or the left sensorimotor area (head is viewed from above using a Mercator-mapping; nose is upwards). Middle: the strength of event-related components in the control (blue) and sleep-deprived (red) condition. Right: the corresponding event-related fields [see ref. 76, for more details].

In contrast to N1, which is localized above sensory cortices, P300 appears as a positive deflection of the EEG voltage at the frontal scalp around 300 ms post-stimulus. P300 is associated with frontal lobe functioning and can be elicited by presenting the participant with a visual or auditory stimulus that is either unexpected or highly relevant for the task at hand [78]. Following SD, the onset of P300 is somewhat delayed and its amplitude reduced [4]. More recently, it has been suggested that two subtypes of P300 should be distinguished, a so-called ‘novel P3’ and a ‘target P3’ [35]. The novel P3 is thought to originate from the anterior cingulate cortex or the supplementary motor area and to be related to the detection of unexpected stimuli. The target P3, in contrast, is thought to reflect neural activity at the temporal-parietal junction and to be related to the detection of anticipated stimuli [79]. Consistent with this idea, Gosselin and colleagues [35] found that both novel and target P3 were reduced in amplitude after 36 h of waking, and that performance on attention-demanding tasks deteriorated. The authors concluded that SD affects a whole attentional network, consisting of several interconnected cortical regions.

As was the case for N1, caffeine affects P300 in a manner opposite to that of SD. Deslandes and colleagues [80] found in an oddball paradigm that administration of a 400-mg dose of caffeine reduced the latency and increased the amplitude of P300. Given the current collection of empirical observations, it seems reasonable to conclude that the amplitude reduction of N1 and P300 after SD and the amplitude enhancement of those components after a dose of caffeine are both related to changes in attention and thus to changes in sensory processing, albeit in opposite directions. This conclusion is further supported by the observation that both N1 and P300 are influenced by noradrenergic, dopaminergic, and cholinergic systems, i.e., systems which are known to mediate attention and arousal [81, 82].

Frequency contents of the encephalogram

Encephalographic patterns can also be characterized by the amplitude and frequency of electric (or magnetic) activity. The normal human EEG and MEG show amplitudes of 20–100 µV and a few hundred fT, respectively, and frequencies up to about 100 Hz. As explained, time-dependent changes of the neural activity can be addressed via event-related studies, whereas spectral analysis allows for investigating ongoing cortical dynamics, that is, activity that is not necessarily event-locked. The latter approach has a long tradition in the study of various stages of sleep and wakefulness as well as pathophysiological situations such as seizures. Traditionally, encephalographic frequencies are divided into several frequency bands: delta (1–4 Hz), theta (4–8 Hz), alpha (8–12 Hz), beta (13–30 Hz), and gamma (30–90 Hz). During sleep characteristic changes occur in the spectral power within those frequency bands [see e.g., refs. 83–85], which have been instrumental in the identification and subsequent definition of distinct sleep stages [86]. For instance, so-called slow-wave sleep (sleep stages III and IV) is characterized by strong delta activity and low responsiveness to external stimuli and might have a recovery function [87].
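As a minimal sketch of how spectral power can be assigned to the frequency bands listed above, the following example computes a power spectrum of a synthetic signal with a plain discrete Fourier transform and sums the power per band. The signal, its sampling rate, and the treatment of band edges (each band includes its lower limit and excludes its upper limit) are assumptions made for illustration; practical analyses typically use windowed, averaged spectral estimates rather than a single raw transform.

```python
import math

# Band limits in Hz as given in the text; each band is treated here as
# the half-open interval [low, high), which is an assumption.
BANDS = {
    "delta": (1, 4),
    "theta": (4, 8),
    "alpha": (8, 12),
    "beta": (13, 30),
    "gamma": (30, 90),
}


def power_spectrum(signal, fs):
    """Return (frequencies, power) from a direct discrete Fourier transform."""
    n = len(signal)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
        freqs.append(k * fs / n)
        power.append((re * re + im * im) / n ** 2)
    return freqs, power


def band_power(freqs, power, bands=BANDS):
    """Sum spectral power over the frequency bins that fall into each band."""
    return {
        name: sum(p for f, p in zip(freqs, power) if low <= f < high)
        for name, (low, high) in bands.items()
    }


# Synthetic 2-s 'recording' sampled at 200 Hz (hypothetical values):
# a 10-Hz alpha rhythm with a weaker 6-Hz theta rhythm superimposed.
fs = 200
signal = [
    2.0 * math.sin(2 * math.pi * 10 * i / fs) + 1.0 * math.sin(2 * math.pi * 6 * i / fs)
    for i in range(2 * fs)
]

freqs, power = power_spectrum(signal, fs)
powers = band_power(freqs, power)
theta_alpha_ratio = powers["theta"] / powers["alpha"]

for name, value in powers.items():
    print(f"{name}: {value:.3f}")
print(f"theta/alpha ratio: {theta_alpha_ratio:.2f}")
```

A ratio such as theta power over alpha power is one simple way to express a shift of spectral power toward lower frequencies; it is used here only as an illustrative index.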

For sleep-deprived participants, the occurrence of slow-wave sleep is enhanced on the first recovery night after SD, whereas REM sleep, which is signified by strong desynchronization of the encephalogram, does not differ from the baseline value [84, 88–91]. In the waking EEG, a reduction in alpha activity after SD was already observed by Blake and Gerard [92] and has been replicated many times [for an overview, see ref. 31]. More recently, a progressive increase in theta activity during prolonged wakefulness has been reported [93, 94]. In general, the waking EEG appears to undergo changes in several frequency bands that might be attributed to circadian as well as homeostatic processes. The distinct variations in the theta and alpha band may represent electrophysiological correlates of different aspects of a circadian change in arousal [95–97]. Moreover, the combined increase in theta activity and decrease in alpha activity seems to correspond to a slowing of encephalographic rhythms, that is, a shift of spectral power toward lower frequencies. A prevalence of slow-wave activity might thus provide an index of sleep propensity and/or cortical deactivation [84, 91, 94, 98].

Spatial patterns of the encephalogram

Besides amplitude and frequency characteristics, SD affects the spatial distribution of encephalographic activity over the scalp. Several studies reported an increase in the degree of correlation between activity in both hemispheres as a result of prolonged wakefulness [see e.g., refs. 99, 100]. Furthermore, an anterior shift of activity has been reported after 24 h of wakefulness: the overall power, as well as the power contained in the alpha band, decreased at occipital and temporal channels and increased at central and frontal channels [76]. These results are consistent with encephalographic studies on the sleep-wake cycle that revealed an anterior shift of activity during the wakefulness-sleep transition [101–103]. The changes in encephalographic activity along the anterior-posterior axis seem to indicate that effects of SD are local and mainly pertain to prefrontal areas [104]. That is, increased synchronization of cortical activity, resulting in an increased amplitude of encephalographic activity, is thought of as a sign of cortical deactivation or cortical idling [63]. Hence, these data seem in line with fMRI results showing reduced activity in the PFC. Note, however, that the change in spatial distribution cannot be simply attributed to a single cortical structure, not least because of the low spatial resolution of encephalographic data.

Neuroimaging studies

The detrimental effect of SD on cognitive performance has instigated research aimed at pinpointing influences on the PFC using neuroimaging methods like PET and fMRI. As already indicated above, a number of studies have observed reduced neural activity in the dorsolateral PFC (see Fig. 2) following SD, and a subsequent decrement in cognitive performance [105–108]. Findings of decreased regional brain activity suggest that neurons cannot keep pace with high task load requirements in the case of complex task performance and/or sustained task performance during SD [108]. Other studies, most notably by the group of Drummond, however, found an opposite pattern of results, namely an increase in bilateral dorsolateral PFC activity induced by SD [105–108]. For example, in a verbal learning task, Drummond and colleagues [105] found increased brain activity in the dorsolateral PFC and parietal cortex after 35 h of wakefulness, although subsequent free recall was significantly impaired after SD. They concluded, therefore, that, depending on task difficulty, there can be dynamic, compensatory changes in neural recruitment and, furthermore, that those changes bear a striking resemblance to neural changes in normal aging. In support, Choo and colleagues [109] found that the increase in activity in the PFC after SD was dependent on working memory load. However, they concluded that the mixed results obtained from imaging studies following SD suggest that studying the neural correlates of working memory in this setting is more complex than originally envisioned.

Illustration of the lateral part (Brodmann’s areas 45 and 46 and the lateral parts of areas 9 to 12) and the ventromedial part of the PFC (the medial parts of areas 10 and 11). The dorsolateral PFC is engaged in executive functions such as working memory, whereas the ventromedial PFC is part of the limbic system involved in decision making and social cognition [42]. Figure adopted from Gazzaniga et al. [55].

Thus, there is still debate as to whether the neural activity in PFC increases or decreases after SD. In part, the discrepancy in the empirical findings regarding PFC activity might be caused by methodological differences, like the experimental task of choice, the amount of SD, the age of the participants, and the method used to analyze the data. Although all these factors affect PFC activity, its central role in SD and sleep is now well established [4, 42, 104]. As discussed above, the PFC is thought to play an important role in the higher-order cognitive impairments noted in various SD experiments. Thomas and coworkers [45] reported, besides a global decrease in brain activity, a decrease in activity throughout the thalamus and PFC, both of which are part of the network mediating alertness, attention and higher-order cognitive processes [110].

The prominence of the PFC in attentional processes has received considerable support and provides further insight into the functional role of the attentional network during SD [45, 111, 112]. In particular, the interaction between bottom-up arousal and top-down attention, as regulated through the PFC, appears crucial [111, 112]. In this context, it is interesting to note that the PFC also has a top-down influence on the basal forebrain (BF) cholinergic system. The cholinergic system is part of the ascending activation system projecting to all cortical areas, but it only receives cortical input via the limbic system [110]. Hence, only the limbic system can effectively induce a rapid change of activity in these nuclei, and activation of these pathways provides a mechanism for modulating the neural responses to novel and motivationally relevant events, facilitating further processing of these events [110, 113, 114].

SD-related cellular and molecular changes — the micro-level

A neuromodulator that has been studied extensively in sleep research is adenosine (AD). AD is a purinergic messenger that regulates many physiological processes, particularly in excitable tissues such as heart and brain. It helps to couple the rate of energy expenditure to the energy supply by either reducing the activity of the tissue or by increasing the energy supply to the tissue. AD plays a variety of roles in the brain as intercellular messenger and has been shown to be involved in processes such as sleep regulation and arousal [115]. As a neuromodulator, AD has a general inhibitory effect on the release of most other neurotransmitters, such as the excitatory glutamate and acetylcholine [116–118], and thereby reduces excitability throughout the brain. Indeed, systemic or intracerebroventricular administration of AD has been shown to promote sleep and decrease the waking state [119]. The role of AD in sleep regulation has been supported further by studies showing a progressive increase of extracellular AD in the BF during prolonged wakefulness and a decrease during subsequent recovery sleep [120–123]. In addition, pharmacological manipulations involving AD receptors have shown that agonists generally promote sleep [124], whereas antagonists such as caffeine reduce sleep [125]. Hence, there is ample evidence that AD plays a central role as endogenous sleep factor [cf. refs. 118, 126].

Effects of SD on AD levels

Several mechanisms are capable of influencing the extracellular AD level, such as the modulation of AD anabolic and catabolic enzyme activity and AD transport rate through the cell membrane. Because of the presence of equilibrative transporters, regulation of intracellular AD concentrations is critical for the regulation of extracellular AD [115, 127]. Intracellular AD plays an important role in the energy metabolism of the cell and AD concentrations increase with cellular metabolism [128]. Hence, the increase in AD levels during waking is assumed to be caused by an increase in metabolic activity during waking which is reversed again during sleep [120, 129, 130]. The increase in extracellular AD is particularly manifest in the BF, where the concentration progressively increases with increased duration of waking to reach about twice that measured at the onset of the experiment [120]. BF is part of the arousal system of the brain and consists of cholinergic neurons that have long-range projections and are active during wake and REM sleep [131]. As a result, Strecker and colleagues [118] suggested that during prolonged wakefulness, extracellular AD accumulates selectively in the BF and promotes the transition from wakefulness to sleep by inhibiting cholinergic and non-cholinergic BF neurons. Indeed, in the BF it has been demonstrated that AD inhibits both cholinergic neurons and a subset of non-cholinergic neurons [132]. The exclusive role of the cholinergic BF neurons as transducers of sleep drive, however, has been challenged by a study on rats that were administered 192-IgG-saporin, resulting in a 95% lesion of the BF cholinergic neurons. Because these rats had intact sleep homeostasis, these results indicate that neither the activity of the BF cholinergic neurons nor the accumulation of AD in the BF during wakefulness is necessary for sleep drive [133].

Extracellular AD exerts its inhibitory effect through different AD receptors. Although there are four subtypes of AD receptors, A1, A2A, A2B, and A3, several lines of evidence indicate that only the A1 and A2A receptors are involved in mediating the sleep-inducing effect of AD [115, 127, 134]. In support, caffeine acts as an AD receptor antagonist, binds to the A1 and A2A receptors [49], but exerts its arousal effect mainly via the latter [135]. Again, however, the lack of AD A1 receptors does not prevent the homeostatic regulation of sleep [136], indicating that effects of AD are not restricted to either a single cell or receptor type, but are more general.

Effects of AD and acetylcholine on macroscopic brain activity

As also put forward by Strecker and colleagues [118], the build-up of AD during SD might play a central role in bringing about the effects of SD on macroscopic brain activity by inhibiting the ascending activating nuclei important for attention and arousal. In particular, the inhibition of the cholinergic nuclei results in reduced cortical levels of acetylcholine (ACh), which have been shown to produce macroscopic effects similar to those of SD. Although some of these studies were not carried out for the purpose of investigating the effects of SD, we will discuss part of this literature showing that the decrease in ACh and the increase in AD result in effects similar to those of SD. That is, the inhibitory effect of AD extends to the cholinergic neurons of the mesopontine tegmentum, where it also reduces the excitability of those neurons [137]. The cholinergic neurons form a continuous neural network extending from the basal ganglia and BF of the telencephalon to the ventral horn of the spinal cord and, since the cholinergic nuclei have a widespread influence throughout the central nervous system, they are particularly capable of affecting macroscopic brain activity (see Fig. 3) [138].

Fig. 3. Simplified diagram of the ascending activating system in the human brain. Adopted from http://web.lemoyne.edu/~hevern/psy340/lectures/; see text for further details.

First, the cholinergic neurons have a profound effect on thalamocortical arousal [81, 138–141]. Electrical stimulation of the cholinergic neurons or direct application of ACh results in prolonged depolarization of thalamocortical neurons affecting the behavioral state of arousal [142, 143]. The thalamocortical network plays a central role in the generation of cortical rhythms, i.e., the progressive hyperpolarization of the thalamocortical cells during sleep onset causes a shift toward large-amplitude, low-frequency oscillations, as measured with EEG and MEG [139]. Thus, the build-up of AD during SD could modulate brain oscillations indirectly by suppressing other neurotransmitter systems such as the cholinergic nuclei, and ACh may thus act as an endogenous mediator of SD-induced increases in slow-wave activity [130, 144, 145]. In addition, ACh can also affect cortical rhythms directly. For example, Szerb [146] showed in anesthetized cats that stimulation of the reticular formation produced increased cortical ACh and reduced cortical low-frequency activity as measured with EEG.

Second, reduced cortical ACh levels result in the attention-related effects on the amplitude of ERP/ERF components, such as the N1 and P300. The most common effect of ACh is an enhancement of the neural discharge evoked by sensory stimuli. Consequently, the cholinergic system modifies sensory processing in the cerebral cortex by modulating the effectiveness of afferent inputs to cortical neurons, which suggests that cortical ACh modulates the general efficacy of the cortical processing of sensory or associational information [141, 147, 148]. The effects of SD on N1 and P300 could thus be an effect of reduced cortical ACh levels caused by inhibition of the cholinergic neurons by AD. In support, animal research indicates that the middle-latency auditory-evoked potential ‘wave A’, which is the equivalent of the N1 in humans, was reduced in direct proportion to the number of damaged cholinergic cells [149]. Similarly, the ‘cat P300’ disappeared after septal lesions, and the lesioned cats were characterized by marked ACh depletion [150]. It therefore seems that cholinergic systems play an important, albeit not exclusive, role in the mediation of P300 potentials in cats [151]. Furthermore, the novelty-related P300 response can be abolished by cholinergic antagonists in human participants [152]. These data support the notion that cortical cholinergic inputs mediate diverse attentional functions and may be related to the attentional effects reported after SD [114, 141].

Moreover, the increased involvement of the PFC during SD, as reported in several studies [105–108], might be caused by deteriorated performance of cortical areas responsible for perceptual processing. For example, Furey and colleagues [153] found that ACh increased the selectivity of neural responses in extrastriate cortices during visual working memory, particularly during encoding, and increased the participation of ventral extrastriate cortex during memory maintenance. In parallel, they found decreased activation of the anterior PFC. These results indicate that cholinergic enhancement improves memory performance by augmenting the selectivity of perceptual processing during encoding, thereby simplifying processing demands during memory maintenance and reducing the need for prefrontal participation. Likewise, a reduced ACh level during SD would thus deteriorate perceptual processing and thereby place a higher burden on PFC, consistent with the notion of the compensatory role of PFC during SD [108].

Third, increased cortical AD levels also directly suppress cortical responses to incoming stimuli. Several studies have demonstrated that AD applied to the somatosensory cortex reduces the early components of the potentials evoked by peripheral sensory stimuli [154, 155], indicating that thalamocortical excitation is modulated by AD receptors. AD equally reduces thalamocortical inputs onto both inhibitory and excitatory neurons, suggesting that increased cortical AD levels result in a global reduction in the impact of incoming sensory information and thereby reduce the influence of sensory inputs on cortical activity (at least in rodents) [156]. Again, these effects of AD on cortical activity are consistent with the effects of SD on event-related encephalographic activity, i.e., both point to reduced effects of peripheral sensory stimuli on the cerebral cortex [cf. ref. 76]. In sum, the effects of increased AD and decreased ACh levels match the macroscopic effects of SD, such as the reduced ERP components and changes in EEG rhythms. Furthermore, the role of AD during SD has been established by various studies, which show how, by reducing excitability throughout the brain, the increase in AD may cause the effects of SD observed in brain activity on a macroscopic level.

Summary and final remarks

Both in terms of cause and effect, SD is a multifaceted phenomenon. Sleep loss, and the associated deterioration in performance, affects a large portion of the population and is thus of great social and economic importance [157]. On a macroscopic level, SD has widespread detrimental effects, influencing mood, memory, and cognitive performance. Most cognitive research agrees that SD mainly affects executive functions and is particularly manifest in novel tasks, while automated tasks are hardly affected. This readily hints at the involvement of neural structures related to attentional processes, most notably the PFC. The reduced amplitude of the N1 and P300 components in encephalographic recordings after SD mirrors effects of reduced attention and points to reduced cortical responsiveness to incoming stimuli. Neuroimaging studies (PET and fMRI) revealed effects of SD primarily in the PFC and thalamus, both of which are part of the attentional network involved in executive functioning. Similarly, the shift of encephalographic activity toward lower frequencies and the spatial shift of power toward frontal areas indicate, in all likelihood, reduced cortical arousal, particularly of the frontal areas.

Although neuroimaging and encephalography revealed mainly cortical effects of SD, residing predominantly in the PFC [104], little is known about the more detailed, microscopic processes that underlie those effects. When looking for potential sources, there is a longstanding agreement that the ascending nuclei in the reticular activating system are crucial for a normal sleep-wake cycle and are thus most likely involved in SD [cf. ref. 11]. The reticular activating system consists of several noradrenergic, dopaminergic, and cholinergic nuclei that project throughout the cortex and have a central role in attention and arousal [81, 114]. In this review, we have mainly focused on the cholinergic system, as it is commonly believed that ACh is involved in attentional processes having SD-related effects on brain activity [cf. ref. 138]. Removal or blockage of cholinergic neurons reduces or disrupts the N1 and P300 components in encephalographic recordings. Because the cholinergic neurons innervate the thalamocortical network, which plays a central role in the generation of cortical rhythms, this cholinergic activity is likely related to the modification of cortical rhythms as observed during a sleep cycle and after SD. Several studies identified a strong increase of AD, particularly in the BF during SD, where AD has an inhibitory effect on the activity of the cholinergic nuclei [116–118]. These results support the notion that AD is a modulator of the sleepiness associated with prolonged wakefulness as extracellular AD accumulates selectively in the BF and cortex and promotes the transition from wakefulness to slow-wave sleep by inhibiting cholinergic neurons in the BF [118].

Although this pathway is unlikely to be the only neural process affected by SD, it nicely illustrates how phenomena and processes at the distinguished scales (i.e., macro-, meso-, and micro-levels) might interact, and how dissection of those interactions might provide insight into the underlying causes of the decline in human functioning after SD. In this regard, it seems particularly interesting to investigate further the role of the PFC during SD, with the explicit aim to uncover the neural processes underlying its alleged compensatory function. After all, although there is ample evidence for the involvement of the PFC in executive functioning and SD on a macroscopic level, it remains unclear how these functions are instantiated at a microscopic level. For example, the PFC has top-down connections to the reticular activating system and would thus, in principle, be capable of modulating, or compensating for, the reduced activity in the cholinergic neurons.

On a final note, we would like to remark that the SD literature is replete with attentional studies, while studies of effects of SD on the performance of perceptual-motor tasks are scarce. In fact, our recent study on rhythmic force production [76] seems an exception in this regard. Perceptual-motor tasks are known to differ greatly in the extent to which they call upon attentional and higher-order executive processes, with great demands for entirely novel complex tasks and movement sequences and little or no demands for fully automated skills, such as maintaining balance. In this particular context, a secondary cognitive task may be readily introduced besides a primary perceptual-motor task to objectively assess the degree of automation of the latter task [158]. This may provide a proper benchmark for evaluating the neural effects of SD in terms of attention demands and attentive processes. We believe that such a strategy may open the way for investigating the putative roles of the attentional network in the planning and execution of automated and non-automated perceptual-motor tasks in an integrated fashion, i.e., across micro-, meso-, and macro-levels.

References

Spiegel, K., Leproult, R. and Van Cauter, E. (1999) Impact of sleep debt on metabolic and endocrine function. Lancet 354, 1435–1439.

van Dongen, H.P.A., Rogers, N.L. and Dinges, D.F. (2003) Sleep debt: theoretical and empirical issues. Sleep Biol. Rhythms 1, 5–13.

Leonard, C., Fanning, N., Attwood, J. and Buckley, M. (1998) The effect of fatigue, sleep deprivation and onerous working hours on the physical and mental wellbeing of pre-registration house officers. Ir. J. Med. Sci. 167, 22–25.

Jones, K. and Harrison, Y. (2001) Frontal lobe function, sleep loss and fragmented sleep. Sleep Med. Rev. 5, 463–475.

Bonnet, M.H. and Arand, D.L. (2003) Clinical effects of sleep fragmentation versus sleep deprivation. Sleep Med. Rev. 7, 297–310.

Wilson, H.R. and Cowan, J.D. (1972) Excitatory and inhibitory interactions in localized populations of model neurons. Biophys. J. 12, 1–24.

Freeman, W.J. (1975) Mass Action in the Nervous System. Academic Press, New York.

Haken, H. (1996) Principles of Brain Functioning. Springer, Berlin.

Nunez, P.L. (1995) Neocortical Dynamics and Human EEG Rhythms. Oxford University Press, New York.

Basar, E. (1998) Brain Function and Oscillations: Integrative Brain Function. Springer, Berlin.

Kandel, E.R., Schwartz, J.H. and Jessell, T.M. (1991) Principles of Neural Science. Appleton & Lange, East Norwalk.

Gray, C.M. and Singer, W. (1989) Stimulus-specific neuronal oscillations in orientation columns of cat visual cortex. Proc. Natl. Acad. Sci. USA 86, 1698–1702.

Fries, P., Reynolds, J.H., Rorie, A.E. and Desimone, R. (2001) Modulation of oscillatory neuronal synchronization by selective visual attention. Science 291, 1560–1563.

Dawson, D. and Campbell, S.S. (1991) Timed exposure to bright light improves sleep and alertness during simulated night shifts. Sleep 14, 511–516.

Harma, M., Tenkanen, L., Sjoblom, T., Alikoski, T. and Heinsalmi, P. (1998) Combined effects of shift work and lifestyle on the prevalence of insomnia, sleep deprivation and daytime sleepiness. Scand. J. Work Environ. Health 24, 300–307.

Weibel, L., Spiegel, K., Gronfier, C., Follenius, M. and Brandenberger, G. (1997) Twenty-four-hour melatonin and core body temperature rhythms: their adaptation in night workers. Am. J. Physiol. 41, R948–R954.

Wilkinson, R.T. (1992) How fast should the night-shift rotate. Ergonomics 35, 1425–1446.

Akerstedt, T., Kecklund, G. and Knutsson, A. (1991) Spectral-analysis of sleep electroencephalography in rotating 3-shift work. Scand. J. Work Environ. Health 17, 330–336.

Landrigan, C.P., Rothschild, J.M., Cronin, J.W., Kaushal, R., Burdick, E., Katz, J.T., Lilly, C.M., Stone, P.H., Lockley, S.W., Bates, D.W. and Czeisler, C.A. (2004) Effect of reducing interns’ work hours on serious medical errors in intensive care units. N. Engl. J. Med. 351, 1838–1848.

Vogel, G.W., Vogel, F., McAbee, R.S. and Thurmond, A.J. (1980) Improvement of depression by REM sleep deprivation: new findings and a theory. Arch. Gen. Psychiatry 37, 247–253.

Wirz-Justice, A. and van den Hoofdakker, R.H. (1999) Sleep deprivation in depression: what do we know, where do we go? Biol. Psychiatry 46, 445–453.

Pflug, B. and Tolle, R. (1971) Disturbance of the 24-hour rhythm in endogenous depression and the treatment of endogenous depression by sleep deprivation. Int. Pharmacopsychiatry 6, 187–196.

Wiegand, M., Riemann, D., Schreiber, W., Lauer, C.J. and Berger, M. (1993) Effect of morning and afternoon naps on mood after total sleep deprivation in patients with major depression. Biol. Psychiatry 33, 467–476.

Hemmeter, U., Bischof, R., Hatzinger, M., Seifritz, E. and Holsboer-Trachsler, E. (1998) Microsleep during partial sleep deprivation in depression. Biol. Psychiatry 43, 829–839.

Youngstedt, S.D. and Kripke, D.F. (2004) Long sleep and mortality: rationale for sleep restriction. Sleep Med. Rev. 8, 159–174.

Pilcher, J.J. and Huffcutt, A.I. (1996) Effects of sleep deprivation on performance: a meta-analysis. Sleep 19, 318–326.

Krueger, G.P. (1989) Sustained work, fatigue, sleep loss and performance: a review of the issues. Work Stress 3, 129–141.

Dinges, D.F., Pack, F., Williams, K., Gillen, K.A., Powell, J.W., Ott, G.E., Aptowicz, C. and Pack, A.I. (1997) Cumulative sleepiness, mood disturbance, and psychomotor vigilance performance decrements during a week of sleep restricted to 4–5 hours per night. Sleep 20, 267–277.

Stickgold, R. (2005) Sleep-dependent memory consolidation. Nature 437, 1272–1278.

Karni, A., Tanne, D., Rubenstein, B.S., Askenasy, J.J. and Sagi, D. (1994) Dependence on REM sleep of overnight improvement of a perceptual skill. Science 265, 679–682.

Horne, J.A. (1978) A review of the biological effects of total sleep deprivation in man. Biol. Psychol. 7, 55–102.

McEwen, B.S. (2006) Sleep deprivation as a neurobiologic and physiologic stressor: allostasis and allostatic load. Metabolism 55(Suppl. 2), S20–S23.

Ruskin, D.N., Dunn, K.E., Billiot, I., Bazan, N.G. and LaHoste, G.J. (2006) Eliminating the adrenal stress response does not affect sleep deprivation-induced acquisition deficits in the water maze. Life Sci. 78, 2833–2838.

Nilsson, J.P., Soderstrom, M., Karlsson, A.U., Lekander, M., Akerstedt, T., Lindroth, N.E. and Axelsson, J. (2005) Less effective executive functioning after one night’s sleep deprivation. J. Sleep Res. 14, 1–6.

Gosselin, A., De Koninck, J. and Campbell, K.B. (2005) Total sleep deprivation and novelty processing: implications for frontal lobe functioning. Clin. Neurophysiol. 116, 211–222.

De Valck, E. and Cluydts, R. (2001) Slow-release caffeine as a countermeasure to driver sleepiness induced by partial sleep deprivation. J. Sleep Res. 10, 203–209.

Harrison, Y. and Horne, J.A. (2000) The impact of sleep deprivation on decision making: a review. J. Exp. Psychol. Appl. 6, 236–249.

Samkoff, J.S. and Jacques, C.H. (1991) A review of studies concerning effects of sleep deprivation and fatigue on residents’ performance. Acad. Med. 66, 687–693.

Belenky, G., Wesensten, N.J., Thorne, D.R., Thomas, M.L., Sing, H.C., Redmond, D.P., Russo, M.B. and Balkin, T.J. (2003) Patterns of performance degradation and restoration during sleep restriction and subsequent recovery: a sleep dose-response study. J. Sleep Res. 12, 1–12.

Jewett, M.E., Dijk, D.J., Kronauer, R.E. and Dinges, D.F. (1999) Dose-response relationship between sleep duration and human psychomotor vigilance and subjective alertness. Sleep 22, 171–179.

Killgore, W.D.S., Balkin, T.J. and Wesensten, N.J. (2006) Impaired decision making following 49 h of sleep deprivation. J. Sleep Res. 15, 7–13.

Muzur, A., Pace-Schott, E.F. and Hobson, J.A. (2002) The prefrontal cortex in sleep. Trends Cogn. Sci. 6, 475–481.

Miller, E.K. and Cohen, J.D. (2001) An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 24, 167–202.

Desimone, R. and Duncan, J. (1995) Neural mechanisms of selective visual attention. Annu. Rev. Neurosci. 18, 193–222.

Thomas, M., Sing, H., Belenky, G., Holcomb, H., Mayberg, H., Dannals, R., Wagner, H., Thorne, D., Popp, K., Rowland, L., Welsh, A., Balwinski, S. and Redmond, D. (2000) Neural basis of alertness and cognitive performance impairments during sleepiness. I. Effects of 24 h of sleep deprivation on waking human regional brain activity. J. Sleep Res. 9, 335–352.

Bliss, T.V. and Collingridge, G.L. (1993) A synaptic model of memory: long-term potentiation in the hippocampus. Nature 361, 31–39.

Squire, L.R. and Zola-Morgan, S. (1991) The medial temporal lobe memory system. Science 253, 1380–1386.

Hairston, I.S., Little, M.T., Scanlon, M.D., Barakat, M.T., Palmer, T.D., Sapolsky, R.M. and Heller, H.C. (2005) Sleep restriction suppresses neurogenesis induced by hippocampus-dependent learning. J. Neurophysiol. 94, 4224–4233.

Fredholm, B.B., Battig, K., Holmen, J., Nehlig, A. and Zvartau, E.E. (1999) Actions of caffeine in the brain with special reference to factors that contribute to its widespread use. Pharmacol. Rev. 51, 83–133.

Penetar, D., McCann, U., Thorne, D., Kamimori, G., Galinski, C., Sing, H., Thomas, M. and Belenky, G. (1993) Caffeine reversal of sleep deprivation effects on alertness and mood. Psychopharmacology (Berl.) 112, 359–365.

Baranski, J.V., Cian, C., Esquivie, D., Pigeau, R.A. and Raphel, C. (1998) Modafinil during 64 hr of sleep deprivation: dose-related effects on fatigue, alertness, and cognitive performance. Military Psychol. 10, 173–193.

Wesensten, N.J., Killgore, W.D. and Balkin, T.J. (2005) Performance and alertness effects of caffeine, dextroamphetamine, and modafinil during sleep deprivation. J. Sleep Res. 14, 255–266.

Boutrel, B. and Koob, G.F. (2004) What keeps us awake: the neuropharmacology of stimulants and wakefulness-promoting medications. Sleep 27, 1181–1194.

Caldwell, J.A., Prazinko, B. and Caldwell, J.L. (2003) Body posture affects electroencephalographic activity and psychomotor vigilance task performance in sleep-deprived subjects. Clin. Neurophysiol. 114, 23–31.

Gazzaniga, M., Ivry, R. and Mangun, G.R. (2002) Cognitive Neuroscience: The Biology of the Mind, 2nd ed. Norton, New York.

Ogawa, S., Lee, T.M., Kay, A.R. and Tank, D.W. (1990) Brain magnetic resonance imaging with contrast dependent on blood oxygenation. Proc. Natl. Acad. Sci. USA 87, 9868–9872.

Kwong, K.K., Belliveau, J.W., Chesler, D.A., Goldberg, I.E., Weisskoff, R.M., Poncelet, B.P., Kennedy, D.N., Hoppel, B.E., Cohen, M.S., Turner, R., Cheng, H., Brady, T.J. and Rosen, B.R. (1992) Dynamic magnetic resonance imaging of human brain activity during primary sensory stimulation. Proc. Natl. Acad. Sci. USA 89, 5675–5679.

Cabeza, R. and Nyberg, L. (2000) Imaging cognition II: An empirical review of 275 PET and fMRI studies. J. Cogn. Neurosci. 12, 1–47.

Worsley, K.J., Marrett, S., Neelin, P., Vandal, A.C., Friston, K.J. and Evans, A.C. (1996) A unified statistical approach for determining significant signals in images of cerebral activation. Human Brain Mapp. 4, 58–73.

Friston, K.J., Frith, C.D., Liddle, P.F. and Frackowiak, R.S. (1991) Comparing functional (PET) images: the assessment of significant change. J. Cereb. Blood Flow Metab. 11, 690–699.

Hamalainen, M., Hari, R., Ilmoniemi, R.J., Knuutila, J. and Lounasmaa, O.V. (1993) Magnetoencephalography — theory, instrumentation, and applications to noninvasive studies of the working human brain. Rev. Modern Phys. 65, 413–497.

Klimesch, W., Schack, B., Schabus, M., Doppelmayr, M., Gruber, W. and Sauseng, P. (2004) Phase-locked alpha and theta oscillations generate the P1-N1 complex and are related to memory performance. Brain Res. Cogn. Brain Res. 19, 302–316.

Pfurtscheller, G. and Lopes da Silva, F.H. (1999) Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin. Neurophysiol. 110, 1842–1857.

Boonstra, T.W., Daffertshofer, A., Peper, C.E. and Beek, P.J. (2006) Amplitude and phase dynamics associated with acoustically paced finger tapping. Brain Res. 1109, 60–69.

Ford, J.M., White, P., Lim, K.O. and Pfefferbaum, A. (1994) Schizophrenics have fewer and smaller P300s: a single-trial analysis. Biol. Psychiatry 35, 96–103.

Ford, J.M. and Pfefferbaum, A. (1991) Event-related potentials and eyeblink responses in automatic and controlled processing — effects of age. Electroencephalogr. Clin. Neurophysiol. 78, 361–377.

Rennie, C.J., Robinson, P.A. and Wright, J.J. (2002) Unified neurophysical model of EEG spectra and evoked potentials. Biol. Cybern. 86, 457–471.

Woldorff, M.G. and Hillyard, S.A. (1991) Modulation of early auditory processing during selective listening to rapidly presented tones. Electroencephalogr. Clin. Neurophysiol. 79, 170–191.

Näätänen, R. and Winkler, I. (1999) The concept of auditory stimulus representation in cognitive neuroscience. Psychol. Bull. 125, 826–859.

Lorist, M.M., Snel, J., Kok, A. and Mulder, G. (1994) Influence of caffeine on selective attention in well-rested and fatigued subjects. Psychophysiology 31, 525–534.

Lorist, M.M., Snel, J. and Kok, A. (1994) Influence of caffeine on information-processing stages in well rested and fatigued subjects. Psychopharmacology 113, 411–421.

Knight, R.T., Grabowecky, M.F. and Scabini, D. (1995) Role of human prefrontal cortex in attention control. Adv. Neurol. 66, 21–34.

Cote, K.A., Milner, C.E., Osip, S.L., Ray, L.B. and Baxter, K.D. (2003) Waking quantitative electroencephalogram and auditory event-related potentials following experimentally induced sleep fragmentation. Sleep 26, 687–694.

Atienza, M., Cantero, J. and Escera, C. (2001) Auditory information processing during human sleep as revealed by event-related brain potentials. Clin. Neurophysiol. 112, 2031–2045.

Ferrara, M., De Gennaro, L., Ferlazzo, F. and Curcio, G. (2002) Topographical changes in N1-P2 amplitude upon awakening from recovery sleep after slow-wave sleep deprivation. Clin. Neurophysiol. 113, 1183–1190.

Boonstra, T.W., Daffertshofer, A. and Beek, P.J. (2005) Effects of sleep deprivation on event-related fields and alpha activity during rhythmic force production. Neurosci. Lett. 388, 27–32.

Corsi-Cabrera, M., Arce, C., Del Rio-Portilla, I.Y., Perez-Garci, E. and Guevara, M.A. (1999) Amplitude reduction in visual event-related potentials as a function of sleep deprivation. Sleep 22, 181–189.

Pritchard, W.S. (1981) Psychophysiology of P300. Psychol. Bull. 89, 506–540.

Dien, J., Frishkoff, G.A., Cerbone, A. and Tucker, D.M. (2003) Parametric analysis of event-related potentials in semantic comprehension: evidence for parallel brain mechanisms. Brain Res. Cogn. Brain Res. 15, 137–153.

Deslandes, A.C., Veiga, H., Cagy, M., Piedade, R., Pompeu, F. and Ribeiro, P. (2004) Effects of caffeine on visual evoked potential (P300) and neuromotor performance. Arq. Neuropsiquiatr. 62, 385–390.

Coull, J.T. (1998) Neural correlates of attention and arousal: insights from electrophysiology, functional neuroimaging and psychopharmacology. Prog. Neurobiol. 55, 343–361.

Robbins, T.W. (1997) Arousal systems and attentional processes. Biol. Psychol. 45, 57–71.

Dement, W. and Kleitman, N. (1957) Cyclic variations in EEG during sleep and their relation to eye movements, body motility, and dreaming. Electroencephalogr. Clin. Neurophysiol. 9(Suppl. 4), 673–690.

Borbely, A.A., Baumann, F., Brandeis, D., Strauch, I. and Lehmann, D. (1981) Sleep deprivation: effect on sleep stages and EEG power density in man. Electroencephalogr. Clin. Neurophysiol. 51, 483–495.

Feinberg, I., Koresko, R.L. and Heller, N. (1967) EEG sleep patterns as a function of normal and pathological aging in man. J. Psychiatr. Res. 5, 107–144.

Gaillard, J.M. (1980) Electrophysiological semeiology of sleep. Experientia 36, 3–6.

Horne, J. (1992) Human slow wave sleep: a review and appraisal of recent findings, with implications for sleep functions, and psychiatric illness. Experientia 48, 941–954.

Trachsel, L., Tobler, I. and Borbely, A.A. (1989) Effect of sleep deprivation on EEG slow wave activity within non-REM sleep episodes in the rat. Electroencephalogr. Clin. Neurophysiol. 73, 167–171.

Dijk, D.J., Hayes, B. and Czeisler, C.A. (1993) Dynamics of electroencephalographic sleep spindles and slow wave activity in men: effect of sleep deprivation. Brain Res. 626, 190–199.

Achermann, P., Dijk, D.J., Brunner, D.P. and Borbely, A.A. (1993) A model of human sleep homeostasis based on EEG slow-wave activity: quantitative comparison of data and simulations. Brain Res. Bull. 31, 97–113.

Brunner, D.P., Dijk, D.J. and Borbely, A.A. (1993) Repeated partial sleep deprivation progressively changes in EEG during sleep and wakefulness. Sleep 16, 100–113.

Blake, H. and Gerard, R. (1937) Brain potentials during sleep. Am. J. Physiol. 119, 692–703.

Finelli, L.A., Baumann, H., Borbely, A.A. and Achermann, P. (2000) Dual electroencephalogram markers of human sleep homeostasis: correlation between theta activity in waking and slow-wave activity in sleep. Neuroscience 101, 523–529.

Cajochen, C., Brunner, D.P., Krauchi, K., Graw, P. and Wirz-Justice, A. (1995) Power density in theta/alpha frequencies of the waking EEG progressively increases during sustained wakefulness. Sleep 18, 890–894.

Aeschbach, D., Matthews, J.R., Postolache, T.T., Jackson, M.A., Giesen, H.A. and Wehr, T.A. (1997) Dynamics of the human EEG during prolonged wakefulness: evidence for frequency-specific circadian and homeostatic influences. Neurosci. Lett. 239, 121–124.

Aeschbach, D., Matthews, J.R., Postolache, T.T., Jackson, M.A., Giesen, H.A. and Wehr, T.A. (1999) Two circadian rhythms in the human electroencephalogram during wakefulness. Am. J. Physiol. 277, R1771–R1779.

van Dongen, H.P. and Dinges, D.F. (2005) Sleep, circadian rhythms, and psychomotor vigilance. Clin. Sports Med. 24, 237–249, VII–VIII.

Borbely, A.A. (1982) Sleep regulation. Introduction. Hum. Neurobiol. 1, 161–162.

Corsi-Cabrera, M., Ramos, J. and Meneses, S. (1989) Effect of normal sleep and sleep deprivation on interhemispheric correlation during subsequent wakefulness in man. Electroencephalogr. Clin. Neurophysiol. 72, 305–311.

Kim, H., Guilleminault, C., Hong, S., Kim, D., Kim, S., Go, H. and Lee, S. (2001) Pattern analysis of sleep-deprived human EEG. J. Sleep Res. 10, 193–201.

Werth, E., Achermann, P. and Borbely, A.A. (1996) Brain topography of the human sleep EEG: antero-posterior shifts of spectral power. Neuroreport 8, 123–127.

Cantero, J., Atienza, M., Gomez, C. and Salas, R. (1999) Spectral structure and brain mapping of human alpha activities in different arousal states. Neuropsychobiology 39, 110–116.

De Gennaro, L., Ferrara, M., Curcio, G. and Cristiani, R. (2001) Antero-posterior EEG changes during the wakefulness-sleep transition. Clin. Neurophysiol. 112, 1901–1911.

Horne, J.A. (1993) Human sleep, sleep loss and behaviour: implications for the prefrontal cortex and psychiatric disorder. Br. J. Psychiatry 162, 413–419.

Drummond, S.P.A., Meloy, M.J., Yanagi, M.A., Off, H.J. and Brown, G.G. (2005) Compensatory recruitment after sleep deprivation and the relationship with performance. Psychiatry Res-Neuroimag. 140, 211–223.

Drummond, S.P.A., Brown, G.G., Salamat, J.S. and Gillin, J.C. (2004) Increasing task difficulty facilitates the cerebral compensatory response to total sleep deprivation. Sleep 27, 445–451.

Chee, M.W.L. and Choo, W.C. (2004) Functional imaging of working memory after 24 hr of total sleep deprivation. J. Neurosci. 24, 4560–4567.

Drummond, S.P.A., Brown, G.G., Gillin, J.C., Stricker, J.L., Wong, E.C. and Buxton, R.B. (2000) Altered brain response to verbal learning following sleep deprivation. Nature 403, 655–657.

Choo, W.C., Lee, W.W., Venkatraman, V., Sheu, F.S. and Chee, M.W.L. (2005) Dissociation of cortical regions modulated by both working memory load and sleep deprivation and by sleep deprivation alone. Neuroimage 25, 579–587.

Mesulam, M.M. (1998) From sensation to cognition. Brain 121, 1013–1052.

Foucher, J.R., Otzenberger, H. and Gounot, D. (2004) Where arousal meets attention: a simultaneous fMRI and EEG recording study. Neuroimage 22, 688–697.

Portas, C.M., Rees, G., Howseman, A.M., Josephs, O., Turner, R. and Frith, C.D. (1998) A specific role for the thalamus in mediating the interaction of attention and arousal in humans. J. Neurosci. 18, 8979–8989.

Ghashghaei, H.T. and Barbas, H. (2001) Neural interaction between the basal forebrain and functionally distinct prefrontal cortices in the rhesus monkey. Neuroscience 103, 593–614.

Sarter, M. and Bruno, J.P. (2000) Cortical cholinergic inputs mediating arousal, attentional processing and dreaming: differential afferent regulation of the basal forebrain by telencephalic and brainstem afferents. Neuroscience 95, 933–952.

Dunwiddie, T.V. and Masino, S.A. (2001) The role and regulation of adenosine in the central nervous system. Annu. Rev. Neurosci. 24, 31–55.

Dunwiddie, T.V. and Hoffer, B.J. (1980) Adenine nucleotides and synaptic transmission in the in vitro rat hippocampus. Br. J. Pharmacol. 69, 59–68.

Kocsis, J.D., Eng, D.L. and Bhisitkul, R.B. (1984) Adenosine selectively blocks parallel-fiber-mediated synaptic potentials in rat cerebellar cortex. Proc. Natl. Acad. Sci. USA 81, 6531–6534.

Strecker, R.E., Morairty, S., Thakkar, M.M., Porkka-Heiskanen, T., Basheer, R., Dauphin, L.J., Rainnie, D.G., Portas, C.M., Greene, R.W. and McCarley, R.W. (2000) Adenosinergic modulation of basal forebrain and preoptic/anterior hypothalamic neuronal activity in the control of behavioral state. Behav. Brain Res. 115, 183–204.

Radulovacki, M., Virus, R.M., Djuricic-Nedelson, M. and Green, R.D. (1984) Adenosine analogs and sleep in rats. J. Pharmacol. Exp. Ther. 228, 268–274.

Porkka-Heiskanen, T., Strecker, R.E., Thakkar, M., Bjorkum, A.A., Greene, R.W. and McCarley, R.W. (1997) Adenosine: a mediator of the sleep-inducing effects of prolonged wakefulness. Science 276, 1265–1268.

Basheer, R., Porkka-Heiskanen, T., Strecker, R.E., Thakkar, M.M. and McCarley, R.W. (2000) Adenosine as a biological signal mediating sleepiness following prolonged wakefulness. Biol. Signals Receptors 9, 319–327.

Porkka-Heiskanen, T., Strecker, R.E. and McCarley, R.W. (2000) Brain site-specificity of extracellular adenosine concentration changes during sleep deprivation and spontaneous sleep: an in vivo microdialysis study. Neuroscience 99, 507–517.

Murillo-Rodriguez, E., Blanco-Centurion, C., Gerashchenko, D., Salin-Pascual, R.J. and Shiromani, P.J. (2004) The diurnal rhythm of adenosine levels in the basal forebrain of young and old rats. Neuroscience 123, 361–370.

Portas, C.M., Thakkar, M., Rainnie, D.G., Greene, R.W. and McCarley, R.W. (1997) Role of adenosine in behavioral state modulation: a microdialysis study in the freely moving cat. Neuroscience 79, 225–235.

Lin, A.S., Uhde, T.W., Slate, S.O. and McCann, U.D. (1997) Effects of intravenous caffeine administered to healthy males during sleep. Depress. Anxiety 5, 21–28.

Radulovacki, M. (2005) Adenosine sleep theory: how I postulated it. Neurol. Res. 27, 137–138.

Fredholm, B.B., Chen, J.F., Masino, S.A. and Vaugeois, J.M. (2005) Actions of adenosine at its receptors in the CNS: insights from knockouts and drugs. Annu. Rev. Pharmacol. Toxicol. 45, 385–412.

Latini, S. and Pedata, F. (2001) Adenosine in the central nervous system: release mechanisms and extracellular concentrations. J. Neurochem. 79, 463–484.

Benington, J.H. and Heller, H.C. (1995) Restoration of brain energy metabolism as the function of sleep. Prog. Neurobiol. 45, 347–360.

Benington, J.H., Kodali, S.K. and Heller, H.C. (1995) Stimulation of A1 adenosine receptors mimics the electroencephalographic effects of sleep deprivation. Brain Res. 692, 79–85.

Manns, I.D., Alonso, A. and Jones, B.E. (2000) Discharge properties of juxtacellularly labeled and immunohistochemically identified cholinergic basal forebrain neurons recorded in association with the electroencephalogram in anesthetized rats. J. Neurosci. 20, 1505–1518.

Arrigoni, E., Chamberlin, N.L., Saper, C.B. and McCarley, R.W. (2006) Adenosine inhibits basal forebrain cholinergic and noncholinergic neurons in vitro. Neuroscience 140, 403–413.

Blanco-Centurion, C., Xu, M., Murillo-Rodriguez, E., Gerashchenko, D., Shiromani, A.M., Salin-Pascual, R.J., Hof, P.R. and Shiromani, P.J. (2006) Adenosine and sleep homeostasis in the basal forebrain. J. Neurosci. 26, 8092–8100.

Satoh, S., Matsumura, H., Suzuki, F. and Hayaishi, O. (1996) Promotion of sleep mediated by the A2A-adenosine receptor and possible involvement of this receptor in the sleep induced by prostaglandin D2 in rats. Proc. Natl. Acad. Sci. USA 93, 5980–5984.

Huang, Z.L., Qu, W.M., Eguchi, N., Chen, J.F., Schwarzschild, M.A., Fredholm, B.B., Urade, Y. and Hayaishi, O. (2005) Adenosine A2A, but not A1, receptors mediate the arousal effect of caffeine. Nat. Neurosci. 8, 858–859.

Stenberg, D., Litonius, E., Halldner, L., Johansson, B., Fredholm, B.B. and Porkka-Heiskanen, T. (2003) Sleep and its homeostatic regulation in mice lacking the adenosine A1 receptor. J. Sleep Res. 12, 283–290.

Rainnie, D.G., Grunze, H.C., McCarley, R.W. and Greene, R.W. (1994) Adenosine inhibition of mesopontine cholinergic neurons: implications for EEG arousal. Science 263, 689–692.

Woolf, N.J. (1996) Global and serial neurons form a hierarchically arranged interface proposed to underlie memory and cognition. Neuroscience 74, 625–651.

Steriade, M., McCormick, D.A. and Sejnowski, T.J. (1993) Thalamocortical oscillations in the sleeping and aroused brain. Science 262, 679–685.

Mesulam, M.M. (1995) Cholinergic pathways and the ascending reticular activating system of the human brain. Ann. N Y Acad. Sci. 757, 169–179.

Everitt, B.J. and Robbins, T.W. (1997) Central cholinergic systems and cognition. Annu. Rev. Psychol. 48, 649–684.

Steriade, M., Dossi, R.C. and Nunez, A. (1991) Network modulation of a slow intrinsic oscillation of cat thalamocortical neurons implicated in sleep delta waves: cortically induced synchronization and brainstem cholinergic suppression. J. Neurosci. 11, 3200–3217.

Curro Dossi, R., Pare, D. and Steriade, M. (1991) Short-lasting nicotinic and long-lasting muscarinic depolarizing responses of thalamocortical neurons to stimulation of mesopontine cholinergic nuclei. J. Neurophysiol. 65, 393–406.

Landolt, H.P., Retey, J.V., Tonz, K., Gottselig, J.M., Khatami, R., Buckelmuller, I. and Achermann, P. (2004) Caffeine attenuates waking and sleep electroencephalographic markers of sleep homeostasis in humans. Neuropsychopharmacology 29, 1933–1939.

Gritti, I., Mainville, L. and Jones, B.E. (1993) Codistribution of GABA- with acetylcholine-synthesizing neurons in the basal forebrain of the rat. J. Comp. Neurol. 329, 438–457.

Szerb, J.C. (1967) Cortical acetylcholine release and electroencephalographic arousal. J. Physiol. 192, 329–343.

Donoghue, J.P. and Carroll, K.L. (1987) Cholinergic modulation of sensory responses in rat primary somatic sensory cortex. Brain Res. 408, 367–371.

Sarter, M. and Bruno, J.P. (1997) Cognitive functions of cortical acetylcholine: toward a unifying hypothesis. Brain Res. Brain Res. Rev. 23, 28–46.

Harrison, J.B., Woolf, N.J. and Buchwald, J.S. (1990) Cholinergic neurons of the feline pontomesencephalon. I. Essential role in ‘wave A’ generation. Brain Res. 520, 43–54.

Harrison, J.B., Buchwald, J.S., Kaga, K., Woolf, N.J. and Butcher, L.L. (1988) ‘Cat P300’ disappears after septal lesions. Electroencephalogr. Clin. Neurophysiol. 69, 55–64.

Kaga, K., Harrison, J.B., Butcher, L.L., Woolf, N.J. and Buchwald, J.S. (1992) Cat ‘P300’ and cholinergic septohippocampal neurons: depth recordings, lesions, and choline acetyltransferase immunohistochemistry. Neurosci. Res. 13, 53–71.

Hammond, E.J., Meador, K.J., Aung-Din, R. and Wilder, B.J. (1987) Cholinergic modulation of human P3 event-related potentials. Neurology 37, 346–350.

Furey, M.L., Pietrini, P. and Haxby, J.V. (2000) Cholinergic enhancement and increased selectivity of perceptual processing during working memory. Science 290, 2315–2319.

Petrescu, G. and Haulica, I. (1983) Preliminary data concerning adenosine action upon the evoked potential after electrical stimulation of the vibrissal follicles in the adult rat. Physiologie 20, 229–233.

Addae, J.I. and Stone, T.W. (1988) Purine receptors and kynurenic acid modulate the somatosensory evoked potential in rat cerebral cortex. Electroencephalogr. Clin. Neurophysiol. 69, 186–189.

Fontanez, D.E. and Porter, J.T. (2006) Adenosine A1 receptors decrease thalamic excitation of inhibitory and excitatory neurons in the barrel cortex. Neuroscience 137, 1177–1184.

Colten, H.R. and Altevogt, B.M. (2006) Sleep disorders and sleep deprivation: an unmet public health problem. The National Academies Press, Washington, DC.

Maxwell, J.P., Masters, R.S. and Eves, F.F. (2000) From novice to no know-how: a longitudinal study of implicit motor learning. J. Sports Sci. 18, 111–120.

Additional information

Received 24 October 2006; received after revision 23 November 2006; accepted 22 January 2007

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License ( https://creativecommons.org/licenses/by-nc/2.0 ), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Boonstra, T.W., Stins, J.F., Daffertshofer, A. et al. Effects of sleep deprivation on neural functioning: an integrative review. Cell. Mol. Life Sci. 64, 934 (2007). https://doi.org/10.1007/s00018-007-6457-8
